Artificial intelligence (AI) has moved beyond experimentation to become a strategic imperative for organizations seeking competitive advantage. However, realizing the full potential of AI requires more than innovative algorithms and vast datasets. It demands a robust operational framework, ideally one housed within an AI Center of Excellence (CoE).

This article outlines the key processes, tools, and talent strategies necessary to operationalize AI excellence and drive tangible business value from your CoE.

Let’s break operationalization down into five key areas:

Building the Operational Framework

A well-defined operational framework provides structure and consistency to your AI initiatives, ensuring they are delivered efficiently, ethically, and in alignment with business objectives.

AI Project Lifecycle Management

Establishing a standardized methodology for the AI project lifecycle is crucial. This includes clearly defined phases for:

- Discovery: Identifying business problems suitable for AI solutions and assessing their feasibility.

- Development: Building, training, and validating AI models.

- Deployment: Integrating models into production systems.

- Monitoring: Continuously tracking model performance and identifying the need for retraining or adjustments.
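The Monitoring phase depends on a concrete rule for when a model needs retraining. The article does not prescribe a drift metric; the sketch below uses the population stability index (PSI) as one illustrative option. PSI compares the binned distribution of a feature or score at training time with the distribution seen in production. It assumes both inputs are already binned into proportions, and the 0.2 threshold is a commonly quoted rule of thumb, not a standard, so treat it as a tunable assumption.

```python
import math
from typing import Sequence

# Assumption: a frequently cited rule of thumb; tune per model and feature.
RETRAIN_THRESHOLD = 0.2


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions given as per-bin proportions."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp empty bins so log() and the division stay defined.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def needs_retraining(expected: Sequence[float], actual: Sequence[float],
                     threshold: float = RETRAIN_THRESHOLD) -> bool:
    """Flag the model for review when drift exceeds the threshold."""
    return population_stability_index(expected, actual) > threshold


training_bins = [0.25, 0.25, 0.25, 0.25]  # hypothetical training-time distribution
live_bins = [0.10, 0.20, 0.30, 0.40]      # hypothetical production distribution
print(round(population_stability_index(training_bins, live_bins), 4))
print(needs_retraining(training_bins, live_bins))
```

In practice a flag like this would open a review or retraining ticket rather than trigger retraining automatically, which keeps a human in the loop.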

Implementing quality gates and approval processes at each stage ensures rigor and accountability. Furthermore, risk management and compliance should be integrated throughout the lifecycle to proactively address potential issues related to data security, bias, and regulatory requirements.
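To make "quality gates" concrete, here is a minimal sketch of a gate check between lifecycle phases. The gate criteria (for example `bias_review_passed` or `rollback_plan_documented`) are hypothetical placeholders; each CoE would define its own. Placing bias and security reviews inside the gates is one way to weave risk management and compliance through the lifecycle rather than bolting them on at the end. Monitoring is treated as the final phase here, since in practice it feeds back into retraining.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    DISCOVERY = 1
    DEVELOPMENT = 2
    DEPLOYMENT = 3
    MONITORING = 4


class GateError(Exception):
    """Raised when a project tries to leave a phase without passing its gate."""


@dataclass
class Project:
    name: str
    phase: Phase = Phase.DISCOVERY
    evidence: set = field(default_factory=set)  # names of checks signed off so far


# Hypothetical gate criteria: every listed check must be signed off before leaving the phase.
GATES = {
    Phase.DISCOVERY: {"business_case_approved", "data_feasibility_confirmed"},
    Phase.DEVELOPMENT: {"validation_metrics_met", "bias_review_passed"},
    Phase.DEPLOYMENT: {"security_review_passed", "rollback_plan_documented"},
}


def advance(project: Project) -> Project:
    """Move the project to the next phase only if its current gate is satisfied."""
    required = GATES.get(project.phase)
    if required is None:
        raise GateError(f"{project.phase.name} is the final phase")
    missing = required - project.evidence
    if missing:
        raise GateError(f"{project.phase.name} gate blocked, missing: {sorted(missing)}")
    project.phase = Phase(project.phase.value + 1)
    return project


project = Project("churn-model", evidence={"business_case_approved"})
try:
    advance(project)
except GateError as err:
    print(err)  # blocked: data feasibility has not been signed off yet
project.evidence.add("data_feasibility_confirmed")
advance(project)
print(project.phase.name)
```

Recording who signed off each check, and when, would turn the same structure into an audit trail for accountability.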

Governance and Ethics

A strong ethical foundation is paramount for sustainable AI adoption. This necessitates developing a comprehensive AI ethics framework that outlines principles for responsible AI practices, such as fairness, transparency, and accountability.

Model governance and lifecycle management are critical for tracking model lineage, ensuring reproducibility, and managing model versions. Moreover, stringent adherence to data privacy laws and other regulations, such as Singapore’s Personal Data Protection Act (PDPA), is non-negotiable.
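As an illustration of lineage and versioning, the sketch below records a content hash of the training data and configuration for every registered model version, plus a pointer to the version it replaced. Identical hashes indicate identical inputs, which supports reproducibility checks. The `ModelRegistry` class is hypothetical and in-memory only; an MLOps platform would normally provide this capability.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ModelRecord:
    name: str
    version: int
    training_data_sha256: str      # fingerprint of the exact training data used
    config_sha256: str             # fingerprint of hyperparameters and settings
    parent_version: Optional[int]  # lineage: the version this one replaced, if any


def fingerprint(payload: bytes) -> str:
    return hashlib.sha256(payload).hexdigest()


class ModelRegistry:
    """Hypothetical in-memory registry for illustration only."""

    def __init__(self) -> None:
        self._records: Dict[str, List[ModelRecord]] = {}

    def register(self, name: str, training_data: bytes, config: dict) -> ModelRecord:
        history = self._records.setdefault(name, [])
        parent = history[-1].version if history else None
        record = ModelRecord(
            name=name,
            version=len(history) + 1,
            training_data_sha256=fingerprint(training_data),
            # sort_keys=True makes the hash independent of key order,
            # so the same settings always produce the same fingerprint
            config_sha256=fingerprint(json.dumps(config, sort_keys=True).encode("utf-8")),
            parent_version=parent,
        )
        history.append(record)
        return record

    def lineage(self, name: str) -> List[ModelRecord]:
        """All registered versions of a model, oldest first."""
        return list(self._records.get(name, []))


registry = ModelRegistry()
v1 = registry.register("credit-risk", b"hypothetical 2023 data", {"max_depth": 4, "lr": 0.1})
v2 = registry.register("credit-risk", b"hypothetical 2024 data", {"lr": 0.1, "max_depth": 4})
print(v2.version, v2.parent_version, v1.config_sha256 == v2.config_sha256)
```

Here the second version was retrained on new data with unchanged settings, which the matching config hash and differing data hash make visible at a glance.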

Defining the Technology Stack and Tools

The right technology stack empowers your AI CoE to build, deploy, and manage AI solutions effectively.

Core Platform Components

Investing in robust platform components is essential:

- MLOps and model management platforms: Streamlining the end-to-end machine learning lifecycle, including model training, deployment, monitoring, and governance.

- Data infrastructure and pipeline tools: Providing scalable and reliable infrastructure for data storage, processing, and the creation of efficient data pipelines.

- Development and collaboration environments: Facilitating seamless collaboration among team members with integrated development environments and version control systems.

Build-or-Buy Decisions

Carefully evaluating whether to leverage off-the-shelf vendor solutions or build custom tools is crucial. Key evaluation criteria should include functionality, scalability, security, and ease of use. Integration with existing enterprise systems is a critical factor to avoid data silos and ensure seamless workflows. A thorough cost-benefit analysis framework should guide these decisions, considering both upfront investment and ongoing maintenance costs.
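The cost-benefit part of that framework can be sketched as a comparison of net value over a planning horizon, with an optional discount rate for future years. All figures below are hypothetical, and the qualitative criteria (functionality, scalability, security, ease of use, integration) would still need to be scored separately. The example illustrates why the horizon matters: a low-upfront vendor option can win over three years and lose over seven.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Option:
    name: str
    upfront_cost: float
    annual_running_cost: float  # licences, hosting, maintenance, staff time
    annual_benefit: float       # estimated value delivered per year


def net_value(option: Option, years: int, discount_rate: float = 0.0) -> float:
    """Upfront cost plus each year's net cash flow, discounted back to today."""
    total = -option.upfront_cost
    for year in range(1, years + 1):
        cash_flow = option.annual_benefit - option.annual_running_cost
        total += cash_flow / (1 + discount_rate) ** year
    return total


def rank(options: List[Option], years: int, discount_rate: float = 0.0) -> List[Option]:
    """Options ordered from highest to lowest net value."""
    return sorted(options, key=lambda o: net_value(o, years, discount_rate), reverse=True)


# Hypothetical figures for illustration only.
buy = Option("vendor platform", upfront_cost=50_000, annual_running_cost=120_000, annual_benefit=300_000)
build = Option("in-house build", upfront_cost=400_000, annual_running_cost=60_000, annual_benefit=300_000)

for horizon in (3, 7):
    best = rank([buy, build], years=horizon)[0]
    print(horizon, "years:", best.name)
```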

Creating the Talent Strategy and Organizational Design

The success of your AI CoE hinges on attracting, developing, and retaining the right talent.

Core Roles and Responsibilities

Defining clear roles and responsibilities within the CoE is fundamental:

- Data scientists and ML engineers: Responsible for developing, training, and deploying AI models.

- AI solution architects and product managers: Defining the overall AI strategy and translating business needs into technical solutions.

- Domain experts and business analysts: Providing crucial domain knowledge and ensuring AI solutions address real business problems.

Hiring and Development

Given the scarcity of AI talent, implementing effective recruitment strategies is vital. This includes actively engaging with the AI community and exploring diverse talent pools. Simultaneously, investing in upskilling the existing workforce through training programs can bridge skill gaps. Creating clear career paths and retention strategies is essential to keep valuable AI professionals within your organization.

Organizational Design

The optimal organizational design for your AI CoE depends on your company’s structure and culture. Common models include:

- Centralized: A single, dedicated AI team serving the entire organization.

- Federated: AI teams embedded within different business units, with a central coordinating function.

- Hybrid: A combination of centralized expertise and decentralized execution.

Regardless of the model, forming cross-functional teams helps keep technical capabilities aligned with business needs. Establishing performance management and incentives that recognize the distinct contributions of AI roles is also important.

Focusing on and Prioritizing Key Areas of Success

A strategic approach to identifying and prioritizing AI use cases is essential for maximizing impact.

Use Case Portfolio Management

Implementing a robust business impact assessment framework helps evaluate the potential value of different AI applications. This should be coupled with a technical feasibility analysis to assess the practicality of implementation. Based on these assessments, effective resource allocation strategies can be developed to focus on high-impact, feasible projects.
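One simple way to combine the two assessments is a weighted score, followed by allocating limited team capacity to the highest-scoring use cases. The sketch below assumes 1 to 5 scores for impact and feasibility, a 60/40 weighting toward impact, a minimum score of 3.0, and effort in team-months; all of these are illustrative assumptions, as are the example use cases. Greedy allocation is a heuristic and will not always find the best possible portfolio.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UseCase:
    name: str
    impact: float       # business impact score, 1 to 5 (assumed scale)
    feasibility: float  # technical feasibility score, 1 to 5 (assumed scale)
    effort: int         # estimated team-months


def priority(uc: UseCase, impact_weight: float = 0.6) -> float:
    """Weighted blend of impact and feasibility."""
    return impact_weight * uc.impact + (1 - impact_weight) * uc.feasibility


def allocate(use_cases: List[UseCase], capacity_months: int, min_score: float = 3.0) -> List[str]:
    """Greedily fund the highest-priority use cases that still fit the remaining capacity."""
    funded, remaining = [], capacity_months
    for uc in sorted(use_cases, key=priority, reverse=True):
        if priority(uc) >= min_score and uc.effort <= remaining:
            funded.append(uc.name)
            remaining -= uc.effort
    return funded


# Hypothetical portfolio for illustration only.
cases = [
    UseCase("fraud detection", impact=5, feasibility=4, effort=6),
    UseCase("customer chatbot", impact=3, feasibility=5, effort=3),
    UseCase("demand forecasting", impact=4, feasibility=3, effort=5),
    UseCase("generative design", impact=4, feasibility=1, effort=8),
]
print(allocate(cases, capacity_months=10))
```

With ten team-months available, demand forecasting scores well but no longer fits once the two higher-priority projects are funded, and generative design falls below the feasibility-weighted threshold.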

Domain-Specific Applications

AI can drive value across various business domains. Examples relevant to Singaporean businesses include:

- Customer experience and personalization: Utilizing AI to understand customer preferences and deliver tailored experiences.

- Operations and process optimization: Leveraging AI for automation, predictive maintenance, and supply chain optimization.

- Risk management and compliance: Employing AI for fraud detection, regulatory reporting, and risk assessment.

- Product and service innovation: Using AI to develop new products and services.

Measuring ROI and Business Value

Demonstrating the tangible benefits of AI initiatives is crucial for securing continued investment and support.

Financial Metrics

Key financial metrics to track include:

- Cost savings and efficiency gains: Quantifying reductions in operational costs and improvements in efficiency achieved through AI adoption.

- Revenue generation and growth: Measuring the direct impact of AI-powered products and services on revenue.

- Investment return calculations: Assessing the overall financial return on AI investments.
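The financial metrics above can be rolled into a basic return calculation: total benefit (cost savings plus attributable revenue) minus total cost, divided by total cost. The sketch also computes a payback period. The figures are hypothetical, and the hard part in practice is attribution, that is, counting only savings and revenue that can reasonably be credited to the AI initiative.

```python
import math
from typing import Optional


def roi(cost_savings: float, incremental_revenue: float, total_cost: float) -> float:
    """Simple ROI: net gain divided by cost, so 0.25 means a 25% return."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    total_benefit = cost_savings + incremental_revenue
    return (total_benefit - total_cost) / total_cost


def payback_months(upfront_cost: float, monthly_net_benefit: float) -> Optional[int]:
    """Whole months until cumulative net benefit covers the upfront cost."""
    if monthly_net_benefit <= 0:
        return None  # the initiative never pays back at this run rate
    return math.ceil(upfront_cost / monthly_net_benefit)


# Hypothetical figures for illustration only.
print(f"ROI: {roi(250_000, 150_000, 320_000):.0%}")
print("Payback (months):", payback_months(240_000, 20_000))
```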

Operational Metrics

Beyond financial metrics, track operational performance:

- Model performance and accuracy: Monitoring key metrics like precision, recall, and F1-score to ensure models are performing as expected.

- Time-to-deployment improvements: Measuring the efficiency of the AI deployment process.

- User adoption and satisfaction: Assessing how readily AI-powered tools and applications are being adopted and their impact on user satisfaction.
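For a binary classifier, precision, recall, and F1-score follow directly from counts of true positives, false positives, and false negatives. The sketch computes them in plain Python and flags any metric that falls below an agreed target. The targets shown are hypothetical, and libraries such as scikit-learn offer equivalent, more complete functions.

```python
from typing import Dict, List, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                           positive: int = 1) -> Dict[str, float]:
    """Precision, recall, and F1 for one positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def below_target(metrics: Dict[str, float], targets: Dict[str, float]) -> List[str]:
    """Names of metrics that fell below their agreed target."""
    return sorted(name for name, target in targets.items() if metrics.get(name, 0.0) < target)


m = classification_metrics([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 1, 0, 0, 0])
print(m)
print(below_target(m, {"precision": 0.8, "recall": 0.7}))  # hypothetical targets
```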

Strategic Metrics

Finally, consider strategic indicators of AI maturity:

- Competitive advantage indicators: Evaluating how AI initiatives are contributing to a stronger market position.

- Innovation pipeline health: Assessing the continuous flow of new AI ideas and projects.

- Organizational AI maturity progression: Tracking the overall development and integration of AI capabilities within the organization.

By focusing on these five areas (building a strong operational framework, selecting the right technology and tools, cultivating a skilled talent pool, prioritizing impactful use cases, and rigorously measuring value), your AI Center of Excellence can effectively operationalize AI excellence and drive significant business outcomes.

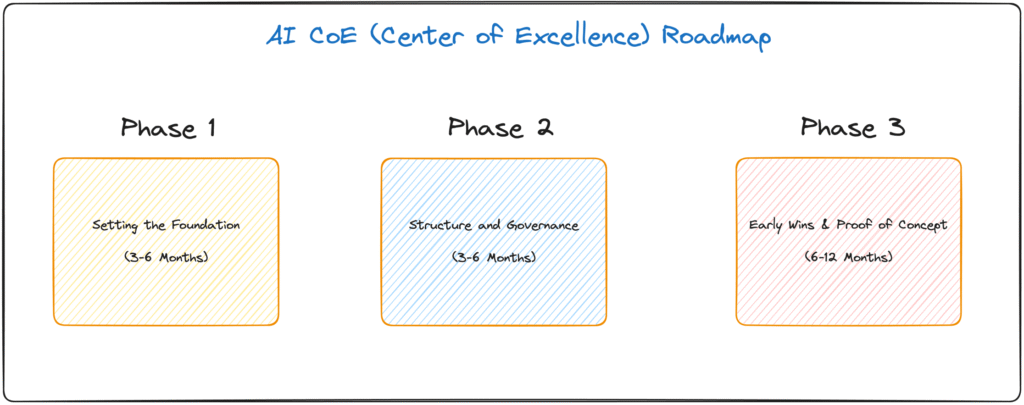

This article is part of a 3-part series on a strategic roadmap to establish your AI Center of Excellence (CoE). You can read the first post here.